III. From First Principles — A New Architecture and Roadmap for Scientific AI

“The task is not so much to see what no one has yet seen, but to think what nobody has yet thought, about that which everybody sees.” — often attributed to Erwin Schrödinger

Introduction

In my previous essay, From First Principles: Root Cause Analysis and Non-Consensus Findings, I argued that the crisis in scientific productivity is not a failure of biology, computation, or funding—it is a failure of architecture, cultural inertia, and poor incentive structures.

Science today runs on a pre-industrial operating system: fragmented datasets, artisanal workflows, and an N-of-1 economic model that guarantees Eroom’s Law will persist. Compute has scaled; knowledge has not.

This second essay turns from diagnosis to design. It lays out the first-principles architecture for Scientific AI—a blueprint for reversing Eroom’s Law by industrializing the production of AI-native data, AI-enabled use cases, and agentic intelligence across the entire scientific value chain.

If the first post asked what went wrong, this one answers how to make it right.

I. The First Principles of Scientific AI

Having diagnosed the failure, we return to first principles.

Purpose: The goal of science is to transform data into knowledge and knowledge into health.

Constraint: The absence of a shared, interoperable architecture prevents compounding progress.

Requirement: To industrialize science, we must industrialize its data and workflows.

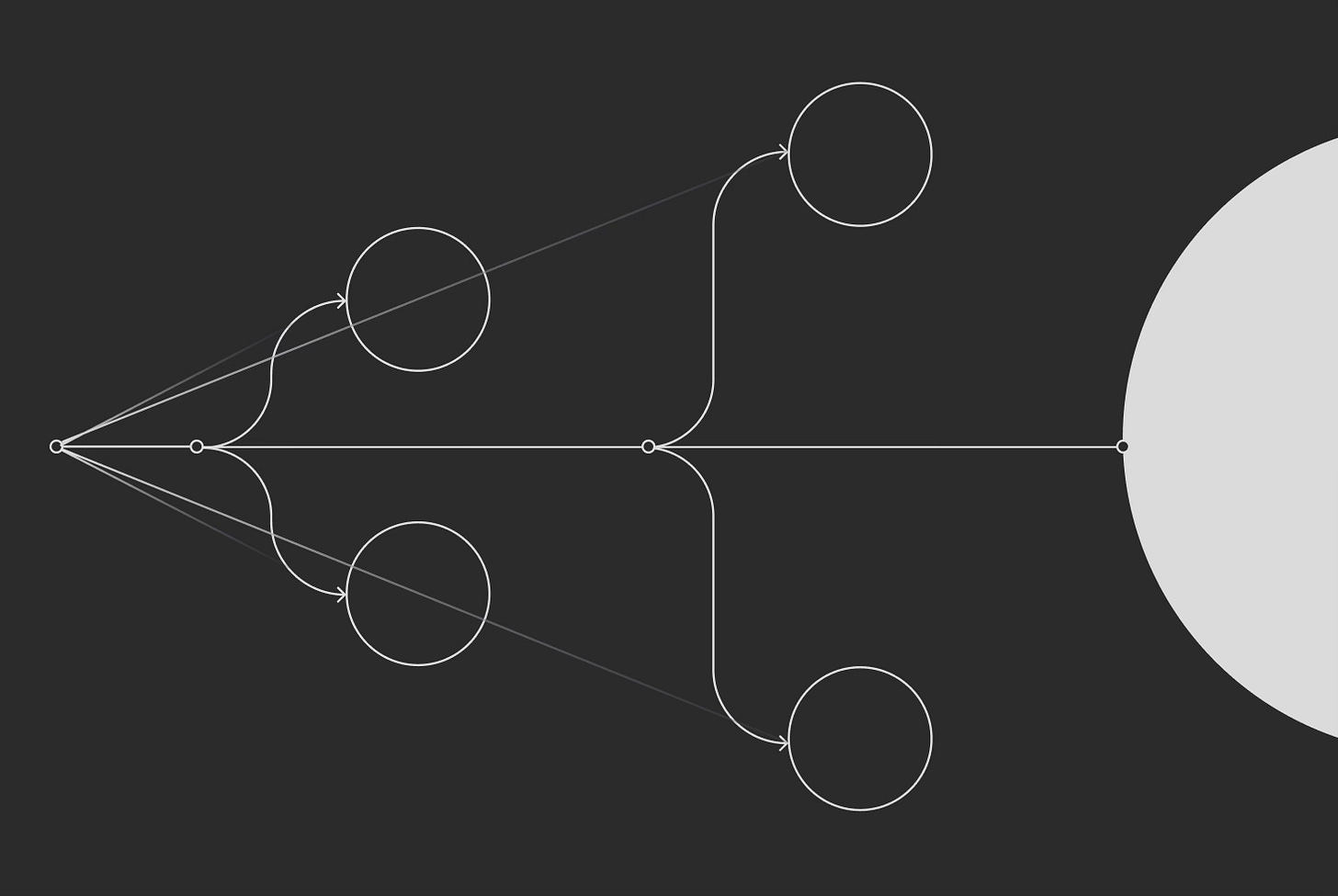

This leads to a new architecture—one designed from first principles for the age of AI: the Scientific Data Foundry, the Scientific Use Case Factory, Tetra AI, and Tetra Sciborgs.

II. The Scientific Data Foundry

Every scientific process begins and ends with data. Yet in today’s architecture, data are born in captivity. Instruments, ELNs, LIMS, and automation systems each generate their own proprietary formats, their own semantics, and their own dialects of meaning. What should be the raw material of intelligence becomes fragmented, opaque, and inert.

The Scientific Data Foundry exists to correct this failure at its source.

It deconstructs proprietary and unstructured scientific data into atomic units — measurements, metadata, derived results, and instrument telemetry — and reconstructs them into AI-native schemas, taxonomies, and ontologies.

This is not ETL; it is epistemological engineering — transforming raw scientific exhaust into structured scientific fuel.
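To make the deconstruct-and-reconstruct step concrete, here is a minimal Python sketch under stated assumptions: a hypothetical "Vendor A" UV-absorbance export, already parsed into a dictionary, is split into the four atomic unit types named above and mapped onto shared, vendor-neutral terms. The field names, the mapping table, and the term names are illustrative inventions, not an actual Foundry schema or any vendor's real format.

```python
from dataclasses import dataclass
from typing import Any

# Illustrative atomic unit types. The four categories come from the essay;
# the field layout is an assumption made for this sketch.
@dataclass(frozen=True)
class Measurement:
    quantity: str  # shared (vendor-neutral) term for what was measured
    value: float
    unit: str

@dataclass(frozen=True)
class AtomicRecord:
    measurements: list[Measurement]
    metadata: dict[str, str]
    derived_results: dict[str, float]
    telemetry: dict[str, float]

# Hypothetical mapping from one vendor's field names to shared terms and units.
VENDOR_A_FIELD_MAP = {
    "Abs_260": ("absorbance_260nm", "AU"),
    "Abs_280": ("absorbance_280nm", "AU"),
}

def harmonize_vendor_a(raw: dict[str, Any]) -> AtomicRecord:
    """Split a parsed, hypothetical Vendor A export into vendor-neutral atomic units."""
    readings = raw["readings"]
    measurements = [
        Measurement(quantity=term, value=float(readings[name]), unit=unit)
        for name, (term, unit) in VENDOR_A_FIELD_MAP.items()
        if name in readings
    ]
    metadata = {"sample_id": str(raw["SampleID"]), "instrument": str(raw["Instr"])}
    telemetry = {"lamp_hours": float(raw["diag"]["LampHrs"])}

    # Derived results are computed from harmonized terms, not vendor field names.
    by_term = {m.quantity: m.value for m in measurements}
    derived: dict[str, float] = {}
    if "absorbance_260nm" in by_term and by_term.get("absorbance_280nm"):
        derived["a260_a280_ratio"] = by_term["absorbance_260nm"] / by_term["absorbance_280nm"]
    return AtomicRecord(measurements, metadata, derived, telemetry)

record = harmonize_vendor_a({
    "SampleID": "S-001", "Instr": "UV-01",
    "readings": {"Abs_260": 1.8, "Abs_280": 1.0},
    "diag": {"LampHrs": 1200},
})
print(record.derived_results)  # {'a260_a280_ratio': 1.8}
```

Because the A260/A280 ratio is computed from the harmonized measurements rather than copied from a vendor field, the same derivation would apply unchanged to any instrument whose export maps onto the same terms.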

The Foundry provides:

Vendor neutrality – data liberated from proprietary formats and locked silos, enabling interoperability across instruments, vendors, and domains.

Composability – datasets that can be recombined and reused across experiments and programs, and even federated across organizations, without loss of context.

Governance – rigorous lineage, quality assurance, and compliance, establishing scientific trust in every transformation and transaction.

Continuous improvement – schemas, taxonomies, and ontologies that evolve as scientific knowledge evolves, ensuring that data never ossify but are continuously refined.
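One way to picture the governance property is a lineage log in which every transformation records a content hash of what it consumed and what it produced, so that a broken or undocumented step becomes detectable. The sketch below is a hypothetical illustration using only the Python standard library; the class names and step labels are assumptions, not part of any described product.

```python
import hashlib
import json
from dataclasses import dataclass

def content_hash(payload: dict) -> str:
    """SHA-256 of a JSON-serializable payload, with sorted keys for stability."""
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()

@dataclass(frozen=True)
class LineageEntry:
    step: str  # hypothetical transformation label
    input_hash: str
    output_hash: str

class LineageLog:
    """Append-only record of transformations applied to one dataset."""

    def __init__(self) -> None:
        self.entries: list[LineageEntry] = []

    def record(self, step: str, before: dict, after: dict) -> None:
        entry = LineageEntry(step, content_hash(before), content_hash(after))
        # Each step must consume exactly what the previous step produced.
        if self.entries and self.entries[-1].output_hash != entry.input_hash:
            raise ValueError(f"broken lineage at step {step!r}")
        self.entries.append(entry)

    def verify(self, final: dict) -> bool:
        """True if the chain is unbroken and ends at exactly this payload."""
        if not self.entries:
            return False
        linked = all(a.output_hash == b.input_hash
                     for a, b in zip(self.entries, self.entries[1:]))
        return linked and self.entries[-1].output_hash == content_hash(final)

log = LineageLog()
raw = {"Abs_260": 1.8}
parsed = {"absorbance_260nm": 1.8}
checked = {"absorbance_260nm": 1.8, "qc": "pass"}
log.record("parse", raw, parsed)
log.record("quality_check", parsed, checked)
print(log.verify(checked))  # True
```

Hashing the payloads, rather than trusting step names, means any silent edit between steps shows up as a mismatch when the chain is verified.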

By standardizing structure and semantics at the point of creation, the Foundry industrializes the production of AI-native scientific data — the universal substrate upon which every downstream workflow, model, and agent depends.

It converts bespoke, artisanal inputs into machine-interpretable, continuously improving digital raw materials, unifying the world’s most heterogeneous data domain under a single, extensible architecture.

The Foundry is where science begins to scale — where data cease to be a byproduct of experimentation and become the engine of discovery and development itself.

III. The Scientific Use Case Factory

Once scientific data are standardized and AI-native, they can finally be industrialized into intelligence.

For decades, every scientific workflow — from assay analysis to process optimization — has been treated as a one-off project. Each organization builds its own scripts, its own models, its own integrations, repeating the same work thousands of times across the industry. This bespoke, project-based paradigm is the digital equivalent of handcraft: artisanal, redundant, and unsustainable at scale.

The Scientific Use Case Factory replaces this inefficiency with an industrial production model for scientific intelligence.

It establishes a disciplined process by which AI-enabled workflows are designed, validated, productized, and continuously improved — transforming transient projects into durable, reusable assets.

Each use case — assay normalization, deviation detection, yield prediction, in-silico modeling, process analytics, or predictive maintenance — is built once, validated once, and then refined through collective learning.

As more data flow through the Foundry, the underlying models and workflows improve continuously, compounding in accuracy and performance.

The result is a library of modular scientific intelligence that can be configured, recombined, and deployed across discovery, development, and manufacturing — delivering exponential leverage rather than linear effort.

The Factory provides:

Standardized reuse – once a use case is proven, it becomes a shareable product across teams, sites, and even enterprises.

Continuous improvement – every execution becomes an opportunity for feedback, learning, and optimization.

Quality at scale – validation and compliance are embedded in the production pipeline, ensuring reliability and reproducibility.

Economies of knowledge – marginal cost falls while insight density rises, creating a flywheel of cumulative learning.
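The "built once, validated once" discipline can be sketched as a registry that refuses to publish a use case until it reproduces a set of reference results, and that serves versioned copies for reuse afterwards. Everything here (the registry class, the reference-case format, the toy normalization function) is a hypothetical Python illustration, not the Factory's actual process.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# A use case here is simply a function from input values to output values.
UseCaseFn = Callable[[list[float]], list[float]]

@dataclass(frozen=True)
class ReferenceCase:
    inputs: list[float]
    expected: list[float]

class UseCaseRegistry:
    """Hypothetical registry: a use case is published only after it passes validation."""

    def __init__(self, tolerance: float = 1e-9) -> None:
        self.tolerance = tolerance
        self._published: dict[tuple[str, int], UseCaseFn] = {}

    def publish(self, name: str, version: int, fn: UseCaseFn,
                references: list[ReferenceCase]) -> None:
        if not references:
            raise ValueError("at least one reference case is required")
        # Validate once, at publication time, against known-good reference results.
        for case in references:
            out = fn(case.inputs)
            if len(out) != len(case.expected) or any(
                abs(o - e) > self.tolerance for o, e in zip(out, case.expected)
            ):
                raise ValueError(f"{name} v{version} failed validation")
        self._published[(name, version)] = fn

    def get(self, name: str, version: Optional[int] = None) -> UseCaseFn:
        """Return the requested version, or the latest published one by default."""
        versions = [v for (n, v) in self._published if n == name]
        if not versions:
            raise KeyError(name)
        return self._published[(name, max(versions) if version is None else version)]

def normalize_to_max(values: list[float]) -> list[float]:
    """Toy assay normalization: express each value as a fraction of the maximum."""
    peak = max(values)
    return [v / peak for v in values]

registry = UseCaseRegistry()
registry.publish("assay_normalization", 1, normalize_to_max,
                 [ReferenceCase([2.0, 4.0], [0.5, 1.0])])
print(registry.get("assay_normalization")([1.0, 5.0]))  # [0.2, 1.0]
```

Because validation happens at publication time, a team that reuses `assay_normalization` inherits the check rather than repeating it, and publishing a new version re-runs the same gate.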

The Factory converts scientific knowledge from a project into a product, and from a cost center into a compounding asset.

When the Foundry industrializes data and the Factory industrializes intelligence, knowledge itself becomes infrastructure — a shared, ever-improving foundation that accelerates every subsequent experiment, program, and therapy.

This is how science begins to compound: when every new use case enriches the next, when every experiment feeds a global learning system, and when discovery becomes not a sequence of projects but an engine of continuous improvement.

IV. Tetra AI

Only when scientific data are standardized in the Foundry, and use cases and workflows are industrialized in the Factory, does a higher-order capability become possible: intelligence that understands science in context.

For decades, scientific software has been procedural — it automates steps, logs results, and renders visualizations. It executes, but it does not understand. It lacks the ability to reason across experiments, infer causal relationships, or synthesize insight across domains.

Tetra AI overcomes this limitation. It is the reasoning and orchestration layer of the Scientific AI Architecture — the connective intelligence that unites data, workflows, and human expertise into a continuous, self-improving system of discovery.

This is not another “AI wrapper” or “LLM copilot.”

Those tools are linguistic façades — impressive at generating text or code, but epistemically hollow. They lack grounding in the semantics, ontology, and causal logic of scientific data. They can summarize, but not truly understand. They can predict words, but not phenomena.

Scientific intelligence demands a different foundation, one rooted in the structured reality of experiments, processes, and materials.

Tetra AI builds directly upon the Scientific Data Foundry and the Scientific Use Case Factory, because agents require order to act intelligently. Anyone who suggests otherwise is an AI tourist, not a native. Beware.

Intelligent agents require:

High-fidelity ontologies, produced by the Foundry from the taxonomies and schemas it industrializes and grounded in the realities of Factory use cases and workflows, so that scientific concepts, measurements, and relationships are machine-interpretable.

Productized use cases and workflows created in the Factory, so that reasoning systems can navigate consistent patterns of action and decision.

Semantic and procedural consistency across these layers, so that intelligence can exploit the full context of scientific meaning rather than hallucinate around it.
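The requirement that intelligence exploit scientific meaning "rather than hallucinate around it" can be illustrated with a small grounding step: before acting, an agent maps each term in a request onto an ontology concept and surfaces any term it cannot resolve instead of guessing. The two-concept ontology and the function names below are hypothetical, chosen only to show the pattern in Python.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Concept:
    term: str
    unit: str
    synonyms: frozenset[str]

# Hypothetical two-concept ontology fragment, for illustration only.
ONTOLOGY = [
    Concept("absorbance_260nm", "AU", frozenset({"a260", "od260", "abs 260"})),
    Concept("cell_viability", "%", frozenset({"viability", "percent live"})),
]

def resolve(mention: str) -> Optional[Concept]:
    """Match a free-text mention to a concept by canonical term or synonym."""
    key = mention.strip().lower()
    for concept in ONTOLOGY:
        if key == concept.term or key in concept.synonyms:
            return concept
    return None

def ground_request(mentions: list[str]) -> dict:
    """Resolve every mention; anything unresolved is surfaced rather than guessed."""
    resolved: dict[str, str] = {}
    unresolved: list[str] = []
    for mention in mentions:
        concept = resolve(mention)
        if concept is None:
            unresolved.append(mention)
        else:
            resolved[mention] = concept.term
    # The agent is allowed to act only when every mention is grounded.
    return {"resolved": resolved, "unresolved": unresolved, "can_act": not unresolved}

print(ground_request(["OD260", "titer"]))
# {'resolved': {'OD260': 'absorbance_260nm'}, 'unresolved': ['titer'], 'can_act': False}
```

The design choice is to fail closed: an ungrounded term blocks action and is reported, which is the behavior the prerequisites above are meant to make possible.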

When those prerequisites are met, Tetra AI becomes not a chatbot but a scientific reasoning system:

Contextualized insight – interpreting data through the lens of its ontology and provenance, not as isolated tokens.

Integrated inference – connecting discoveries in early R&D to implications in process development and manufacturing.

Collaborative cognition – guiding scientists through complex reasoning chains, surfacing relevant experiments, predicting failure modes, and suggesting next actions grounded in empirical context.

Tetra AI is not language intelligence — it is Scientific Intelligence: built upon high-fidelity data, composable workflows, and reproducible ontologies. It does not mimic thought; it industrializes it.

Through the Foundry, the Factory, and Tetra AI, the scientific enterprise evolves from static documentation into dynamic cognition — an architecture where every experiment enriches the next, every model learns from real data, and every scientist works in concert with machine reasoning that actually understands science.

This is not automation. It is comprehension.

It is the emergence of scientific intelligence itself.

V. Tetra Sciborgs

Even the most elegant architecture fails without adoption. Technology alone cannot overcome inertia. The limiting factor in every scientific transformation is not the sophistication of the system, but the sociology of the institution.

That is why we created Tetra Sciborgs — cross-disciplinary scientist-engineer hybrids who embody the operating model of Scientific AI itself.

Sciborgs integrate scientific domain expertise, data engineering, and AI within agile, mission-driven teams. They are not consultants who write reports or developers who hand off code. They are builders and translators, embedding within partner organizations to operationalize the Foundry, scale the Factory, and orchestrate Tetra AI.

Each Sciborg team serves as both catalyst and connective tissue:

Catalyst, because they activate the new architecture — mapping data pipelines, validating ontologies, configuring workflows, and training models until the system becomes self-sustaining.

Connective tissue, because they bridge the persistent divide between IT and science, between data engineers and experimentalists, between ambition and implementation.

Sciborgs convert resistance into momentum. They teach organizations how to think and work natively in the new paradigm — where data are products, workflows are composable, and intelligence is continuous. They internalize scientific context and operational nuance, ensuring that transformation happens with scientists, not to them.

They also safeguard the cultural integrity of the movement. The Sciborg ethos mirrors the architecture they maintain: open, rigorous, collaborative, and continuously improving. They reject the project mindset — “deliver and depart” — in favor of an industrial model of continuous learning, refinement, and scale.

If the Foundry builds the substrate, the Factory builds the machinery, and Tetra AI supplies the intelligence, then the Sciborgs bring the system to life.

They are the living proof that Scientific AI is not a technology stack but a new operating system for science — one that fuses human creativity with machine precision, institutional memory with continuous innovation.

The future of science will not be built by data engineers alone, nor by scientists working in isolation, but by Sciborgs — agile, interdisciplinary teams who embody the convergence of both.

In them, the architecture becomes culture. In them, the replatforming of science becomes irreversible.

VI. The Inversion of Eroom’s Law

When the four pillars — the Foundry, the Factory, Tetra AI, and Sciborgs — interlock, the industry’s trajectory begins to bend.

The same forces that once compounded inefficiency now compound progress.

Data productivity compounds — every dataset improves the next, as standardized structures and shared ontologies allow knowledge to propagate rather than stagnate.

Use case velocity compounds — validated workflows scale across teams, sites, and partners, turning redundancy into leverage.

AI capability compounds — models learn collectively, grounded in consistent data and reproducible context.

Organizational capacity compounds — Sciborgs transmit best practices across programs, accelerating transformation through cultural osmosis.

The system begins to exhibit platform economies of scale: the same feedback loops that powered Moore’s Law, now applied to the production of scientific knowledge.

For the first time in modern history, scientific progress can scale faster than its cost. Eroom’s curve doesn’t just flatten; it inverts. Science begins to compound.

VII. The Moral and Structural Imperative

Every redundant workflow delays a therapy.

Every locked dataset prolongs human suffering.

Every inefficiency represents a life not yet saved.

The crisis of scientific productivity is not simply a technical or economic failure. It is a moral failure of design — a system that traps human creativity inside architectures optimized for control rather than progress.

Replatforming science is not merely a commercial act. It is both a moral and an economic imperative.

We owe it to every scientist, every patient, and every generation yet to come to replace a fragmented, artisanal past with a unified, industrial architecture that compounds knowledge as efficiently as silicon compounds computation.

If Moore’s Law gave humanity exponential compute, then Scientific AI must give humanity exponential understanding.

VIII. Closing — From First Principles

Six years ago, my co-founder and I set out to fundamentally reimagine how science could be conducted in the age of AI.

Our founding vision was simple yet audacious: to enable Scientific AI to solve humanity’s grand challenges.

We knew this would require more than algorithms. It would require architecture — one that unifies data, workflows, intelligence, and people into a continuously improving system of discovery.

That architecture now exists. It is early, imperfect, and rapidly evolving — but it is real.

The Foundry, the Factory, Tetra AI, and Sciborgs together form the first integrated operating system for science in the AI era.

We call it Scientific AI — an industrial architecture for the production of knowledge itself.

The age of artisanal science is ending. The age of industrialized, AI-native science has begun.

IX. Closing Synthesis — Architecture Is Destiny

Reversing Eroom’s Law will not be achieved through incremental projects, one-off integrations, or another generation of vendor slogans.

It demands a wholesale rearchitecture of science — from how data are generated to how intelligence is shared and applied.

The Scientific Data Foundry industrializes data creation.

The Scientific Use Case Factory industrializes intelligence.

Tetra AI industrializes reasoning.

Tetra Sciborgs industrialize adoption.

Together, they form a single compounding system — a scientific operating architecture built for openness, scale, and collective progress.

Architecture is destiny. And the destiny of science — if we build it right — is compounding intelligence in service of human progress.
